Introduction:

Artificial intelligence is evolving rapidly and promises to change how we live and work. Yet despite the hype, many AI models still share two critical flaws: they lack context beyond their training data, and they tend to “hallucinate,” producing inaccurate or nonsensical answers with apparent confidence. Enter Retrieval-Augmented Generation (RAG), a technique that pairs the precision of search with the fluency of generative AI. This post looks at how RAG improves the reliability and context-awareness of AI systems, and why that makes them more useful in practice.

The Problem with Vanilla AI: Context Collapse and Hallucinations

Traditional AI models, especially Large Language Models (LLMs), are trained on massive datasets, but their knowledge is limited to that data and frozen at training time. They often lack access to real-time or domain-specific information, and they are prone to “hallucinations”: plausible-sounding but incorrect output. When a user asks about a recent event or a niche topic outside the training data, the model has nothing to look up, so it may produce an answer from its internal patterns alone, and that answer can be wrong. This “context collapse” diminishes the usefulness of AI in critical applications where accuracy is paramount.

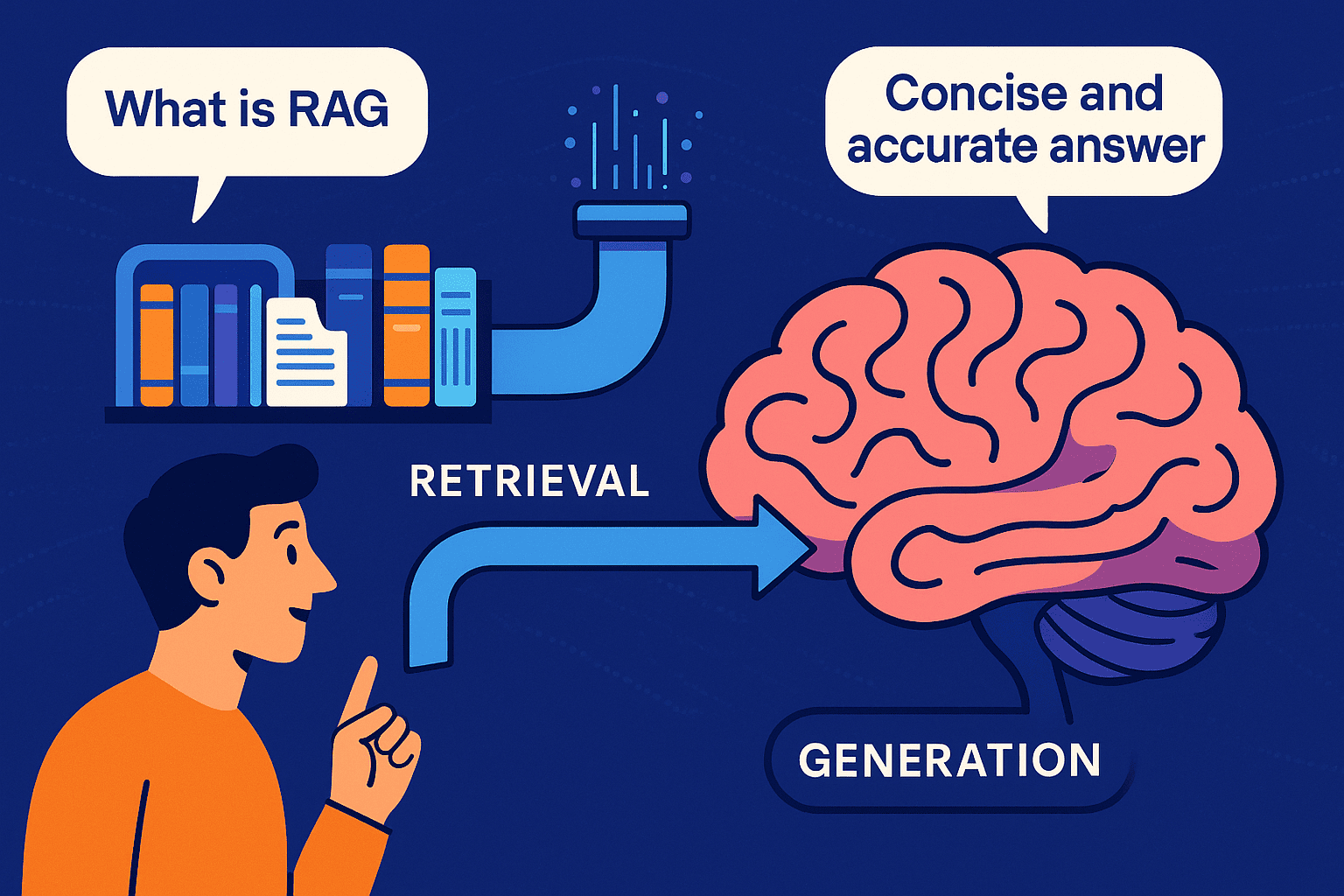

RAG: Bridging the Gap Between Search and Generation

RAG addresses this problem by combining two steps: retrieval and generation. First, when a user asks a question, a retrieval component (such as a search engine) finds relevant passages in a knowledge base or external source. These passages serve as context. Next, the generation component, typically an LLM, receives the retrieved context together with the user’s question and formulates a response grounded in it. This lets the AI draw on up-to-date information and handle the nuances of specific queries.
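To make the two steps concrete, here is a minimal sketch in Python. It is an illustration under simplifying assumptions, not a production design: the knowledge base is a hypothetical in-memory list, retrieval uses plain word-count cosine similarity rather than a real search engine or embedding model, and the names `KNOWLEDGE_BASE`, `retrieve`, and `build_prompt` are invented for this example. The final call to an LLM is deliberately left out, since it depends on whichever model you use.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory knowledge base; a real system would query a
# document store or search index instead.
KNOWLEDGE_BASE = [
    "The return window for all products is 30 days from delivery.",
    "Standard shipping takes 3 to 5 business days.",
    "Gift cards never expire and cannot be refunded.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=2):
    """Retrieval step: return up to top_k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_vec, Counter(tokenize(doc))), doc)
        for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the query at all.
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, context_passages):
    """Generation step, first half: combine retrieved context with the question."""
    context = "\n".join(f"- {passage}" for passage in context_passages)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    question = "How many days is the return window?"
    passages = retrieve(question, KNOWLEDGE_BASE)
    # The resulting prompt would be sent to an LLM of your choice.
    print(build_prompt(question, passages))
```

The point of the sketch is the shape of the pipeline: the model never sees the whole knowledge base, only the few passages that the retrieval step judged relevant to the question.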

Benefits of RAG: Accuracy, Relevance, and Transparency

RAG offers three main advantages. First, it improves accuracy by grounding responses in retrieved data: because the AI is not relying solely on its internal knowledge, it is less likely to invent information, provided the underlying sources are themselves reliable. Second, RAG enhances relevance. By retrieving context directly related to the user’s query, the AI can provide more targeted and helpful answers. Finally, RAG promotes transparency. Users can often see the sources used to generate the response, making the process more understandable and trustworthy. This is particularly valuable in applications where traceability and accountability are essential, such as legal research or scientific analysis.
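One simple way to get that transparency is to keep each retrieved passage paired with its origin and list those origins alongside the answer. The sketch below assumes a hypothetical `Passage` type and helper names (`build_cited_context`, `attach_sources`) made up for illustration; the answer string stands in for whatever the LLM would return.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    source: str  # hypothetical identifier, e.g. a file name or URL
    text: str


def build_cited_context(passages):
    """Number each passage so the model can refer to it as [1], [2], ..."""
    return "\n".join(
        f"[{i}] {p.text}" for i, p in enumerate(passages, start=1)
    )


def attach_sources(answer, passages):
    """Append a numbered source list matching the context numbering."""
    lines = [answer, "", "Sources:"]
    lines += [f"[{i}] {p.source}" for i, p in enumerate(passages, start=1)]
    return "\n".join(lines)


if __name__ == "__main__":
    retrieved = [
        Passage("returns-policy.md", "Returns are accepted within 30 days."),
        Passage("shipping-faq.md", "Standard shipping takes 3 to 5 business days."),
    ]
    print(build_cited_context(retrieved))
    # Placeholder for the model's output, which would cite passages by number.
    print(attach_sources("You can return items within 30 days [1].", retrieved))
```

Because the numbering in the context and in the source list comes from the same ordered list, a reader can trace a bracketed citation in the answer back to the document it came from.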

Real-World Applications and the Future of RAG

RAG is already making a significant impact across various industries. Examples include:

- Customer Service: Providing chatbots with access to up-to-date product information.

- Medical Diagnosis: Assisting doctors by retrieving the most relevant medical literature for a patient’s condition.

- Content Creation: Enabling writers to find accurate and relevant information for their articles.

- Financial Analysis: Providing analysts with real-time market data and reports.

As AI technology advances, RAG is expected to become more sophisticated. Future developments will likely include improved retrieval methods, more efficient integration of different data sources, and better ways to explain the reasoning behind the AI’s responses. This should strengthen RAG’s position as a key technique for building AI systems that are not only powerful but also reliable and trustworthy.